Projects

Active Projects

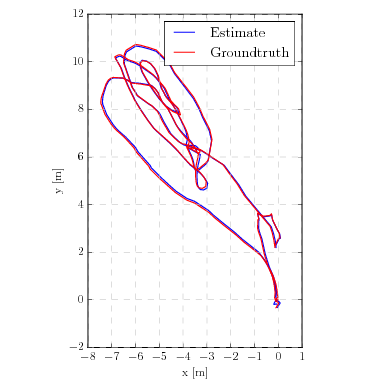

Visual-Inertial Odometry Benchmarking

I’m currently evaluating the performance of several visual-inertial odometry (VIO) pipelines on a range of hardware, from commodity laptops to embedded single-board computers, to understand their trade-offs between accuracy and computational cost for vision-based control of flying robots.

[Paper] [Interactive Graphs]

National Centre of Competence in Research (NCCR) Robotics

Since 2016, I have worked with NCCR Robotics, a consortium of university research labs across Switzerland, in the area of rescue robotics. In addition to conducting ongoing research activities and field experiments, I was also the technical director of an integrated demonstration showcasing our collaborative results, which brought together the work of over 20 different researchers. [Video]

DARPA Fast Lightweight Autonomy

For this three-year project sponsored by DARPA (US Department of Defense), we are partnered with the GRASP Lab at UPenn and the Open Source Robotics Foundation on a team developing novel perception, navigation, and control algorithms for high-speed flight of an autonomous flying platform in cluttered and GPS-denied environments.

[Paper] [Video]

Previous Projects

Air-Ground Exploration for Rescue Robotics

In a search and rescue scenario, we considered how to navigate a fast-deploying air-ground robot team in an unknown environment. Our proposed system involved rapid learning of a terrain classifier, together with active mapping and 3D reconstruction from the flying robot, in order to explore the environment and plan a path for the ground robot.

[Paper 1] [Paper 2] [Video]

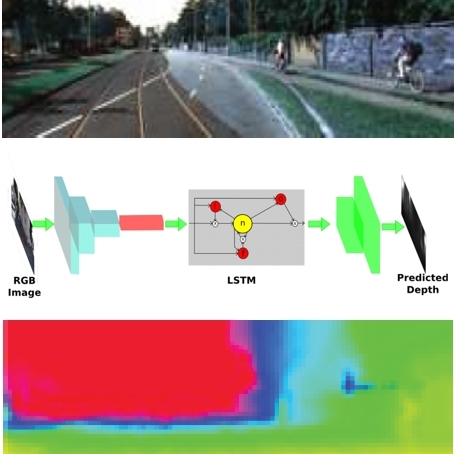

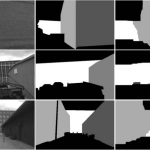

Deep Learning-based Monocular Depth Estimation

We considered the problem of domain independence in monocular depth estimation for mobile robots using deep convolutional networks. We showed some progress toward generalizing trained networks to new environments by training on synthetic, heterogeneous datasets, which are inexpensive to collect and label.

[Paper] [Video]

Volumetric 3D Reconstruction

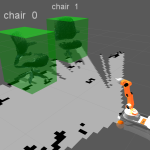

This project considered the problem of next-best-view selection for 3D reconstruction of an object with a volumetric model. We evaluated a number of formulations for quantifying information gain within a volumetric map, and proposed several novel ones. The results offer some insight into volumetric information gain for active object reconstruction with a mobile sensor.

[Paper 1] [Paper 2] [Video] [Software]
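
To give a feel for what a volumetric information gain formulation can look like, here is a minimal sketch of one common choice: summing the occupancy entropy of the voxels a candidate view is expected to see. It is an illustration only, not necessarily one of the formulations evaluated in the papers. The ray layout (a list of occupancy probabilities ordered from the sensor outward), the function names, and the 0.5 occlusion threshold are all assumptions made for this example.

```python
import math


def voxel_entropy(p_occupied):
    """Shannon entropy, in bits, of one voxel's occupancy probability."""
    if p_occupied <= 0.0 or p_occupied >= 1.0:
        return 0.0  # a fully known voxel carries no uncertainty
    q = 1.0 - p_occupied
    return -(p_occupied * math.log2(p_occupied) + q * math.log2(q))


def view_information_gain(rays, occupied_threshold=0.5):
    """Sum voxel entropy along each ray until the ray is likely blocked.

    `rays` is a list of rays; each ray is a list of occupancy
    probabilities ordered from the sensor outward (hypothetical layout).
    A voxel crossed by several rays is counted once per ray, which is a
    simplification made for brevity.
    """
    gain = 0.0
    for ray in rays:
        for p in ray:
            gain += voxel_entropy(p)
            if p > occupied_threshold:
                break  # voxels behind a likely-occupied voxel are occluded
    return gain


def next_best_view(candidate_views):
    """Return the name of the candidate view with the largest gain."""
    return max(
        candidate_views,
        key=lambda name: view_information_gain(candidate_views[name]),
    )
```

Under these assumptions, a view whose rays pass through many unknown voxels (probability near 0.5) scores higher than one that mostly sees voxels already known to be free or occupied.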

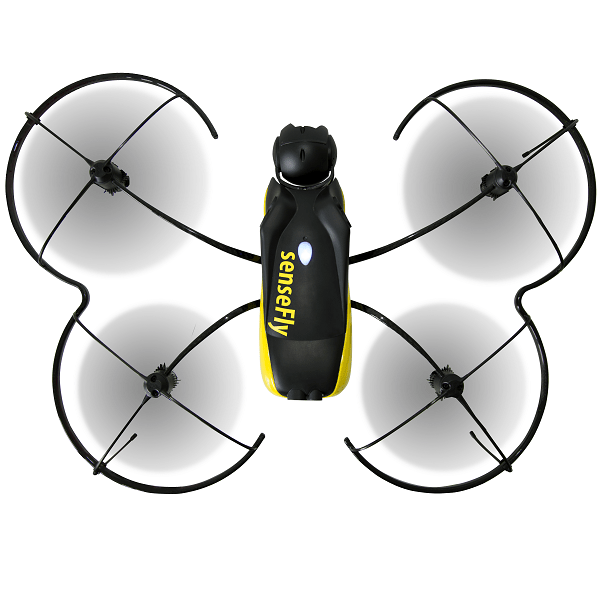

Visual Odometry on the senseFly Albris Drone

As part of a technology transfer project through the Swiss government, I worked with the engineers at senseFly to deploy visual odometry algorithms developed within the Robotics and Perception Group on their professional inspection drone platform, Albris (formerly eXom), to enable autonomous behaviors.

[Video]

Online Perception-Aware Path Planning

Vision-based localization systems rely on highly textured areas to achieve accurate pose estimation. This path planner exploits the scene’s visual appearance (photometric information and texture) in combination with its 3D geometry to navigate in a previously unknown environment, and it significantly reduces pose uncertainty compared with trajectories planned without considering the robot’s perception.

[Paper]

Unmanned Port Security Vehicle (UPSV)

The Unmanned Port Security Vehicle (UPSV) is a low-cost, easily deployable sensor platform designed for first response and inspection scenarios in shallow water and port environments. I contributed to the development and testing of this system while at the Field Robotics Laboratory at the University of Hawai`i at Manoa.

[Video]

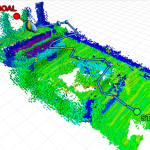

Semantic Object Modeling

Modeling of arbitrary 3D objects from many partial observations. Vision-based object detection and automatic foreground segmentation are applied to RGB-D imagery to extract 3D point cloud data corresponding to the object. Multiple such observations are collected and filtered, resulting in a point cloud model for the object.

[Thesis]

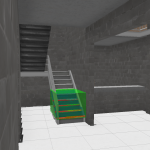

Stairway Modeling

Discovering stairways in the environment and assessing their traversability is important for path planning for stair-climbing robots. RGB-D step edge detections are combined and a generative model is fit to them, enabling the estimation of step dimensions and stairway properties.

[Paper]

Edge Voxels

Volumetric edge extraction from point cloud data as an analogue of visual edge detection in images. We apply a 3D structure tensor operation to voxelized spatial data and isolate the voxels corresponding to physical edges in the environment.

[Paper]

Building Facade Modeling

A top-down method for estimating planar models for building facades in single-view stereo imagery. The dominant planar facades in the scene can be modeled by iteratively fitting a generative model with RANSAC to the stereo data in disparity space and then using a Markov Random Field to assign each pixel to one candidate plane (or to the background).

[Paper]
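
As a rough illustration of the RANSAC step described for facade modeling, the sketch below fits a single plane of the form d = a*u + b*v + c to (u, v, disparity) samples. It is a generic RANSAC plane fit under stated assumptions, not the method from the paper: it omits the iterative extraction of multiple planes and the Markov Random Field labeling, and the function names, tolerance, and iteration count are hypothetical.

```python
import random


def plane_from_three(p1, p2, p3):
    """Solve d = a*u + b*v + c through three (u, v, d) points, or None."""
    (u1, v1, d1), (u2, v2, d2), (u3, v3, d3) = p1, p2, p3
    # Cramer's rule on the 3x3 system with rows [u, v, 1].
    det = u1 * (v2 - v3) - v1 * (u2 - u3) + (u2 * v3 - u3 * v2)
    if abs(det) < 1e-9:
        return None  # the three image points are (nearly) collinear
    a = (d1 * (v2 - v3) - v1 * (d2 - d3) + (d2 * v3 - d3 * v2)) / det
    b = (u1 * (d2 - d3) - d1 * (u2 - u3) + (u2 * d3 - u3 * d2)) / det
    c = (u1 * (v2 * d3 - v3 * d2) - v1 * (u2 * d3 - u3 * d2)
         + d1 * (u2 * v3 - u3 * v2)) / det
    return a, b, c


def ransac_disparity_plane(points, iterations=200, tolerance=0.5, seed=0):
    """Return (model, inliers) for the plane supported by the most points.

    `points` is a list of (u, v, disparity) tuples. `tolerance` is the
    maximum disparity residual for a point to count as an inlier.
    """
    rng = random.Random(seed)  # fixed seed keeps the sketch repeatable
    best_model, best_inliers = None, []
    for _ in range(iterations):
        model = plane_from_three(*rng.sample(points, 3))
        if model is None:
            continue  # degenerate sample, draw again
        a, b, c = model
        inliers = [
            p for p in points
            if abs(a * p[0] + b * p[1] + c - p[2]) <= tolerance
        ]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers
```

To extract several facades in this style, one could remove the inliers of the best plane and run the fit again on the remaining points, then hand the resulting candidate planes to a separate labeling stage.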

Jeff Delmerico
